Recently, deepfakes of actors Rashmika Mandanna and Katrina Kaif went viral, sparking concern over the misuse of artificial intelligence. The Union Government responded by reminding social media platforms of Section 66D of the Information Technology Act, 2000, which deals with punishment for cheating by personation using a computer resource. It states that whoever, by means of any communication device or computer resource, cheats by personation shall be punished with imprisonment of either description for a term which may extend to three years, and shall also be liable to a fine which may extend to Rs 1 lakh.

Some unknown players, hiding in the vast, dark world of the web, created Mandanna’s video and Kaif’s image. They could be sitting in Timbuktu or Zaire, far beyond the reach and jurisdiction of Indian law. Did they do this to cheat someone? What is the definition of cheating, and how does it apply here? Whom did they cheat? Did they earn any money from it? Does it amount to defamation? Or does it come under outraging the modesty of a woman? The law could not be vaguer. And how is this law going to prevent similar incidents from happening again?

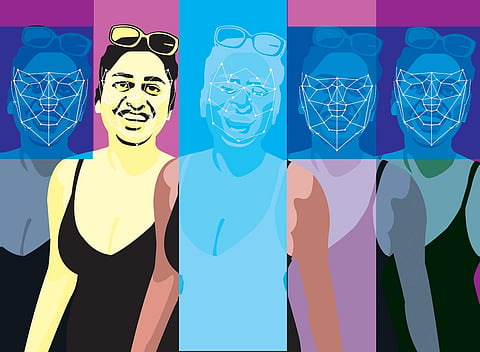

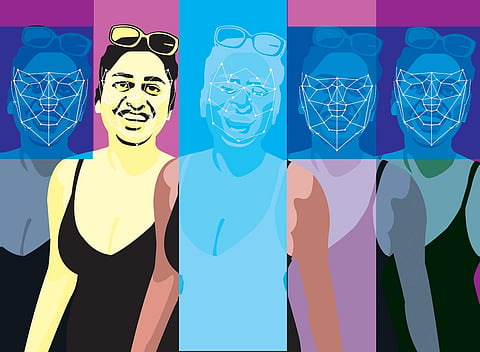

We are in 2023 now; the law was made 23 years ago. In the world of technology, that is centuries. Technology is changing so fast that the legislative process and the judiciary will never be able to keep up with its blitzkrieg speed. Anyone could become the subject of a deepfake. The deepfake porn industry is booming all over the world, and neither Google’s nor Microsoft’s search engine can tell fake from real. Unsurprisingly, women are the primary victims of this rising tide of digitally altered porn. And it is not just celebrities who fall prey to this dark industry.

Nearly 95 per cent of fake porn is non-consensual. Much of it is made to order: people commission such clips of their colleagues, friends or love interests, and some firms will produce them from Instagram or Facebook profile photos for a small sum. More alarmingly, many AI tools and apps are now widely and freely available and require no technical expertise. These tools let users digitally strip the clothing from a person in a picture or insert faces into sexually explicit videos. In a country like India, where spurned lovers often attack the objects of their affection with acid or knives, it is anyone’s guess how many lives this made-to-order porn will ruin. If that doesn’t scare you enough, consider this: there is no age restriction on accessing and using such apps, so doctored child pornography could be made from the cute photos of children that we post on social media. And if a teenager creates a deepfake, how is the law going to deal with it?

There are graver concerns too. In Kerala, a video and audio clip recently went viral on social media, causing anger and frustration among many who watched it. It purported to show a prominent politician abusing a particular religion and its tenets. The politician filed a complaint about the clip. The police investigation found that the video had been lip-synced using AI to a separate audio clip of a rationalist speaking during a private talk. The rationalist stood by his criticism of the religion but said he had nothing to do with any video or audio attributed to the politician. The clip’s origin is still being investigated, but the chances of tracing its perpetrators are remote. It remains in circulation even now and has most probably ruined the politician’s career.

Elections are approaching, so brace for a flood of AI-generated fake videos that could cause communal disturbances and social strife. Opponents can easily be defamed and destroyed with such false content, and mischievous minds can create communally sensitive videos to stir up riots. This nightmare is going to unfold in countless ways.

AI is still in its infancy, yet it has already opened up frightening possibilities. We are living in a post-truth era in which it is almost impossible to separate the wheat from the chaff without sophisticated tools. Such tools will be beyond the reach of ordinary people, and by the time the truth is revealed, the damage will be devastating. The law is never going to catch up with this Frankenstein’s monster. The only way to protect ourselves is to stop believing anything we see on social media or the internet unless it has been verified and found to be true. Stop forwarding messages and break the chain of fakes.

Anand Neelakantan

Author of Asura, Ajaya series, Vanara and Bahubali trilogy

mail@asura.co.in