In the medieval legend of Hamelin, a town plagued by rats turns to a mysterious Piper. With a melodious tune, he lures the vermin away. But when the town reneges on its promise, the Piper returns—this time playing a sweeter, more dangerous song. The children follow him, entranced, and vanish beyond the hills, never to return.

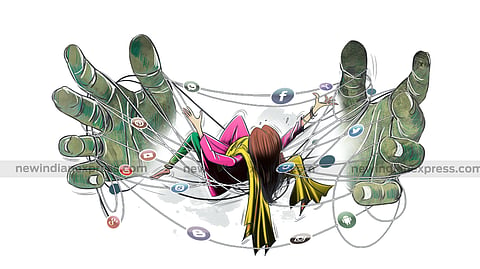

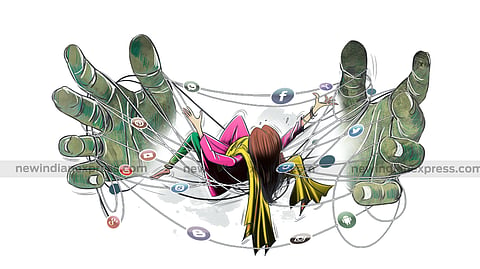

In the twenty-first century, we face a new Pied Piper. He wears no cloak and carries no flute. His music flows through glowing screens, algorithmic feeds, and conversational machines. Social media and artificial intelligence do not march children away in a single procession; instead, they beckon gently, persistently, invisibly—one swipe, one prompt, one response at a time.

On 10 December 2025, Australia became the first nation to acknowledge this danger in law, enforcing a nationwide ban on social media access for children under sixteen. Controversial though it was, the decision marked a historic shift: governments are beginning to recognise that digital platforms are not neutral tools, but powerful behavioural systems capable of shaping attention, emotion, and cognition. For India—a country with one of the youngest populations on earth—the Piper's tune is louder, more seductive, and far less regulated.

Why Australia stopped the music

Australia's intervention was not driven by moral panic or generational nostalgia. It was grounded in evidence. Studies revealed that nearly all children aged 10 to 15 were active on social media, many exposed to cyberbullying, grooming, self-harm content, and persistent anxiety. Crucially, policymakers did not blame parents or children. They pointed instead to the Piper's instrument itself: platforms engineered to maximise engagement through infinite scroll, emotional triggers, algorithmic amplification, and dopamine-reward loops.

Adolescents, whose brains are still developing—particularly the regions responsible for impulse control, emotional regulation, and long-term reasoning—are neurologically ill-equipped to resist such enchantment. Expecting children to outwit systems designed by teams of behavioural scientists is like asking the children of Hamelin to ignore a song written precisely for their ears. Australia's decision recognised a basic asymmetry: the Piper knows exactly what he is doing; the children do not. India, by contrast, continues to place the burden on families and teachers, while allowing the music to play unchecked.

When AI teaches children to stop thinking

If social media is the Piper's tune for capturing attention, generative AI is his deeper, more dangerous melody, the one that reaches into the architecture of thought itself. Unlike social platforms, which primarily influence what children see and feel, AI systems increasingly participate in how children think. They write essays, solve problems, generate ideas, analyse texts, and even engage in emotional dialogue. The Piper no longer merely leads children down a path; he begins to walk for them.

In 2025, researchers at the MIT Media Lab released a study provocatively titled "Your Brain on ChatGPT". Using EEG recordings, they compared students writing essays unaided, students using search engines, and students relying on generative AI. The findings were unsettling.

Students who relied on ChatGPT showed lower neural activation in regions linked to sustained attention, memory integration, and executive control—functions essential for learning and independent reasoning. When asked later to revise or reproduce their work without assistance, they demonstrated weaker recall and poorer conceptual ownership.

The researchers did not claim permanent damage. But they raised a red flag about cognitive offloading—the gradual outsourcing of mental effort during formative years. When thinking is repeatedly delegated to machines, neural pathways essential for judgment, creativity, and reasoning may never fully form.

In India, where ChatGPT is freely accessible and Google's Gemini is available through educational accounts, the Piper's tune reaches children early, often before foundational cognitive skills are in place.

This is a new kind of brain drain: not the migration of talent across borders, but the quiet externalisation of thinking itself to foreign-owned, cloud-based systems. When a generation learns to rely on external intelligence to perform its intellectual labour, questions of cognitive sovereignty and intellectual self-reliance can no longer be ignored.

The illusion of mastery

Education has always involved friction—struggle, revision, failure, and reflection. It is through this resistance that understanding is forged. Generative AI smooths this path too efficiently. With a single prompt, children can produce fluent essays, polished artwork, or functional code. The result looks like mastery, but often masks emptiness beneath. Educators increasingly describe this phenomenon as synthetic proficiency: output without understanding, fluency without depth.

The danger is not that AI exists in classrooms, but that it enters without structure, guidance, or age-appropriate limits. Used well, AI can be a powerful scaffold. Used indiscriminately, it becomes a crutch—one that weakens the very muscles education is meant to strengthen. In Hamelin, the children did not realise they were being led away. They were simply enjoying the song.

When the Piper becomes a confidant

The enchantment does not stop at cognition. Increasingly, children and adolescents turn to conversational AI for emotional support—seeking reassurance, advice, or companionship. While AI can simulate empathy, it cannot feel it. It cannot exercise moral judgment, clinical discernment, or human responsibility.

Psychological analyses have warned that repeated emotional reliance on AI can reinforce anxiety loops rather than resolve them. OpenAI itself has acknowledged that language models may inadvertently validate harmful thought patterns if users repeatedly seek reassurance.

For minors, the risks multiply. Emotional dependency on machines can displace real relationships, distort expectations of empathy, and blur the line between human care and algorithmic response. The Piper's song begins to replace the voices of parents, teachers, and peers.

India currently has no comprehensive framework governing age-appropriate access to either social media or generative AI. Our digital policies focus on data protection, cybercrime, and content moderation—not on developmental wellbeing or cognitive impact. This silence is dangerous.

Indian children are getting smartphones earlier and using AI for homework and creative work, while teachers receive little training in AI literacy and parents struggle to keep pace with rapidly evolving technologies. The result may be a generation that is technologically fluent yet cognitively fragile: adept at producing outputs, but less practised in reasoning, reflection, and resilience.

Silencing the Piper without breaking the flute

India need not replicate Australia's ban. But it must learn from the principle behind it: children require protection from systems not designed with their development in mind.

A child-centric digital policy would include age-based safeguards rather than blanket prohibitions, platform accountability that shifts responsibility from families to companies, structured integration of AI in education with clear boundaries, and curricula that teach cognitive and emotional literacy alongside technical skills. Above all, it would recognise a simple truth: children are not miniature adults. The technologies that empower adults can enchant—and endanger—the young.

In the legend, Hamelin learned too late that music can heal or destroy, depending on who controls it and who is listening. Today, we still have a choice. We can let the Piper play unchecked, or we can insist on rules that protect those most vulnerable to his tune. Protecting young minds does not mean rejecting technology. It means governing it with wisdom, humility, and foresight—before the children disappear beyond the hills.

(The author is the Founder and CEO of Edutecnicia Pvt. Ltd., Calicut.)